High Availability Through Intelligent Load Balancing Strategies

With the expansion of servers for diverse applications and data centers full of server farms, load balancing has become a vital tool for organizations. As the networks that connect servers to the computers of employees, customers, and suppliers become mission critical, businesses must ensure scalability and high availability for every component, from the edge routers that connect to the Internet to the database servers at the back end.

1. What is Load Balancing and Why Does it Matter?

Load balancing is the process of distributing incoming network traffic across multiple servers or resources to ensure high availability and scalability. In a nutshell, it's a way of ensuring that no single server is overwhelmed with traffic and that all of your resources are used efficiently.

2. The Benefits of Load Balancing

Load balancing can improve the performance, reliability, and availability of your applications or services. By distributing traffic across multiple servers, it helps to ensure that no single server becomes a bottleneck and that each server is utilized effectively, which reduces response times, minimizes downtime, and avoids service disruptions. This, in turn, improves customer satisfaction and user experience.

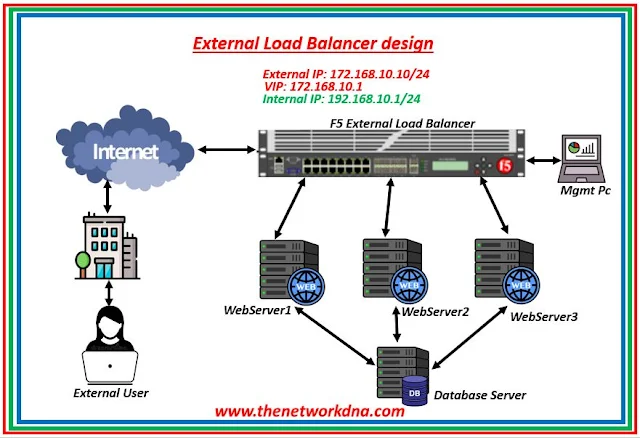

Fig 1.1: Load Balancer design

3. Load Balancing Techniques

When running a web application, it's important to ensure that the servers are being used efficiently and that clients are receiving a fast response. Load balancing techniques help to achieve this goal by distributing requests across a group of servers.

Round Robin

In this method, requests are distributed evenly across a group of servers in a cyclic manner. For example, if you have four servers, the first four requests go to servers one through four in order, and the fifth request goes back to the first server.
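The cyclic rotation can be sketched in a few lines of Python. This is only an illustration, not how any particular load balancer implements it; the class name `RoundRobinBalancer` and the server names are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed, repeating order (illustrative only)."""

    def __init__(self, servers):
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        # cycle() restarts from the first server after the last one.
        self._rotation = cycle(servers)

    def next_server(self):
        return next(self._rotation)


# Hypothetical server names, used only for this example.
balancer = RoundRobinBalancer(["srv-1", "srv-2", "srv-3", "srv-4"])
picks = [balancer.next_server() for _ in range(5)]
print(picks)
```

With four servers, the fifth pick wraps around to the first server again.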

Least Connections

In this method, requests are sent to the server with the fewest active connections. This keeps the load even when some requests hold a connection open much longer than others, and avoids overloading any particular server.
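A minimal sketch of the idea, assuming the balancer itself keeps a count of open connections per server. The class and method names (`LeastConnectionsBalancer`, `acquire`, `release`) are made up for this example.

```python
class LeastConnectionsBalancer:
    """Tracks open connections per server and picks the least busy one."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}
        if not self.active:
            raise ValueError("at least one server is required")

    def acquire(self):
        # On a tie, min() returns the server that was listed first.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when a connection to `server` closes.
        if self.active[server] > 0:
            self.active[server] -= 1


lb = LeastConnectionsBalancer(["srv-a", "srv-b"])
first = lb.acquire()    # both idle, so the first listed server is chosen
second = lb.acquire()   # srv-a now has one connection, so srv-b is chosen
lb.release("srv-b")     # srv-b finishes its request
third = lb.acquire()    # srv-b is idle again and gets the next request
```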

Fastest

In this method, requests are sent to the server with the fastest response time or lowest latency. This helps to ensure that clients receive a faster response and that server resources are used efficiently.

IP Hash

This method uses the client's IP address to determine which server should receive the request. A hash function is applied to the IP address, and the result is used to select the server. This method ensures that requests from the same client are sent to the same server, which can help to maintain session state and improve performance.
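As a rough sketch, the hash-then-select step might look like the following. The function name `pick_server` and the choice of SHA-256 are assumptions for illustration; real products use their own hash functions.

```python
import hashlib
import ipaddress


def pick_server(client_ip, servers):
    """Map a client IP address to one server, deterministically."""
    if not servers:
        raise ValueError("at least one server is required")
    # Normalise the textual address to its packed bytes (works for IPv4 and IPv6).
    packed = ipaddress.ip_address(client_ip).packed
    digest = hashlib.sha256(packed).digest()
    # Use the first 8 bytes of the digest as an integer, then wrap to the list size.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["srv-1", "srv-2", "srv-3"]
chosen = pick_server("203.0.113.7", servers)
chosen_again = pick_server("203.0.113.7", servers)
```

One caveat of this simple modulo approach: when a server is added to or removed from the list, many clients will map to a different server than before.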

Ratio

In this method, requests are distributed based on a ratio or weight assigned to each server. For example, a server with a higher ratio will receive more requests than a server with a lower ratio.
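One simple way to picture this, assuming integer ratios, is a rotation in which each server appears as many times as its ratio. The function name `build_ratio_rotation` and the server names are hypothetical.

```python
from itertools import cycle


def build_ratio_rotation(ratios):
    """Return an endless iterator that yields each server `ratio` times per cycle."""
    rotation = []
    for server, ratio in ratios.items():
        if ratio < 1:
            raise ValueError(f"ratio for {server} must be at least 1")
        rotation.extend([server] * ratio)
    if not rotation:
        raise ValueError("at least one server is required")
    return cycle(rotation)


# Assumed example: the larger server is rated to take three times the traffic.
rotation = build_ratio_rotation({"large-srv": 3, "small-srv": 1})
picks = [next(rotation) for _ in range(8)]
```

This naive version sends consecutive requests to the same server before moving on; a production balancer would typically interleave the picks more smoothly while keeping the same overall ratio.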

Observed

In this method, the load balancer observes the response times of each server over time and directs traffic to the server with the fastest response time. This helps to ensure that clients always receive the fastest response, and allows the system to adapt to changes in the environment.
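One way to "observe over time" is an exponentially weighted moving average of response times, so that recent measurements count more than old ones. This is a sketch under that assumption; the article does not specify the averaging scheme, and the names `ObservedBalancer` and `alpha` are illustrative.

```python
class ObservedBalancer:
    """Keeps a moving average of response time per server and picks the lowest."""

    def __init__(self, servers, alpha=0.3):
        self.alpha = alpha  # weight given to the newest measurement (assumed value)
        self.avg_ms = {server: None for server in servers}

    def record(self, server, response_ms):
        previous = self.avg_ms[server]
        if previous is None:
            self.avg_ms[server] = float(response_ms)
        else:
            self.avg_ms[server] = (
                self.alpha * response_ms + (1 - self.alpha) * previous
            )

    def pick(self):
        # Servers with no measurements yet are tried first so they get sampled.
        for server, average in self.avg_ms.items():
            if average is None:
                return server
        return min(self.avg_ms, key=self.avg_ms.get)


lb = ObservedBalancer(["srv-a", "srv-b"], alpha=0.5)
lb.record("srv-a", 100)
lb.record("srv-b", 50)
before = lb.pick()        # srv-b has the lower average
lb.record("srv-b", 200)   # srv-b slows down; its average rises to 125.0
after = lb.pick()         # traffic shifts to srv-a
```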

Predictive

In this method, the load balancer uses statistical algorithms to predict which server will have the fastest response time based on past performance. By using predictive algorithms, the system can make more informed decisions about which server to send requests to, and can adjust to changing traffic patterns.
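As a deliberately simple stand-in for the statistical prediction, the sketch below extrapolates each server's recent trend one step ahead and picks the lowest predicted value. The linear extrapolation, the window size, and the name `PredictiveBalancer` are all assumptions made for illustration, not the algorithm of any specific product.

```python
from collections import deque


class PredictiveBalancer:
    """Predicts the next response time from the recent trend of each server."""

    def __init__(self, servers, window=5):
        # Keep only the most recent `window` samples per server.
        self.samples = {server: deque(maxlen=window) for server in servers}

    def record(self, server, response_ms):
        self.samples[server].append(float(response_ms))

    def predict(self, server):
        history = self.samples[server]
        if not history:
            return 0.0  # no data yet: treat as attractive so it gets sampled
        if len(history) == 1:
            return history[0]
        # Average change per sample across the window, projected one step ahead.
        slope = (history[-1] - history[0]) / (len(history) - 1)
        return max(0.0, history[-1] + slope)

    def pick(self):
        return min(self.samples, key=self.predict)


lb = PredictiveBalancer(["srv-a", "srv-b"])
for ms in (10, 20, 30):   # srv-a is getting slower
    lb.record("srv-a", ms)
for ms in (50, 40, 30):   # srv-b is getting faster
    lb.record("srv-b", ms)
choice = lb.pick()
```

Both servers last responded in 30 ms, but the trend favours `srv-b`, which is the difference between predicting and simply observing.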

4. Load Balancing Strategies

Load balancing is a key component of any application or service that needs to scale and remain available. Different strategies can be used to distribute traffic across multiple servers, to ensure that performance and availability are maintained. Here, we'll look at some of the most common load balancing strategies.

Active-Passive Failover

In this strategy, one server is designated as the primary server, and another server is designated as the backup or standby server. The primary server handles all incoming traffic, and if it fails, the backup server takes over. This strategy is simple and reliable and protects availability, but it provides no performance benefit, because the standby server sits idle until a failover occurs.
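The failover decision can be sketched as follows, assuming some health check is available. The function name `choose_target` and the `is_healthy` callback are hypothetical; a real deployment would probe the servers over the network.

```python
def choose_target(primary, standby, is_healthy):
    """Send traffic to the primary unless it is down, then fall back to the standby."""
    if is_healthy(primary):
        return primary
    if is_healthy(standby):
        return standby
    raise RuntimeError("no healthy server available")


# A dictionary stands in for real health probes in this example.
health = {"primary-srv": True, "standby-srv": True}
normal = choose_target("primary-srv", "standby-srv", health.get)

health["primary-srv"] = False  # simulate a primary failure
failed_over = choose_target("primary-srv", "standby-srv", health.get)
```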

Active-Active

In this strategy, multiple servers actively handle traffic at the same time, with the load balancer distributing traffic across all of them. This strategy can improve both performance and availability, as traffic can be dynamically distributed based on server capacity and traffic patterns. Because the servers work in parallel, a single server failure does not cause downtime, provided the remaining servers have enough spare capacity to absorb its share of the traffic.
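A small sketch of an active-active pool, assuming a round-robin rotation that simply skips servers marked as down. The class name `ActiveActivePool` and its methods are illustrative only.

```python
class ActiveActivePool:
    """Rotates across all servers, skipping any that are marked down."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._position = 0

    def mark_down(self, server):
        self.healthy.discard(server)

    def mark_up(self, server):
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self):
        # Try each server at most once per call.
        for _ in range(len(self.servers)):
            candidate = self.servers[self._position]
            self._position = (self._position + 1) % len(self.servers)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy server available")


pool = ActiveActivePool(["srv-a", "srv-b", "srv-c"])
pool.mark_down("srv-b")  # simulate a failure of one active server
picks = [pool.next_server() for _ in range(4)]
```

The failed server drops out of the rotation and the other two keep serving requests.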

Global Load Balancing

In this strategy, traffic is distributed across multiple data centers or regions, to ensure that traffic is directed to the closest or fastest data center. This strategy can help to reduce latency and improve performance for geographically distributed applications or services. By sending traffic to the closest or fastest server, users will experience the best possible performance and availability.
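Choosing the "closest or fastest" data center can be sketched as picking the healthy region with the lowest measured latency. The region names and latency figures below are made-up example values, and `pick_region` is a hypothetical helper.

```python
def pick_region(latency_ms, healthy):
    """Return the healthy region with the lowest measured latency."""
    candidates = {
        region: ms for region, ms in latency_ms.items() if region in healthy
    }
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=candidates.get)


# Assumed latencies as measured from one client's location.
latency_ms = {"us-east": 120, "eu-west": 25, "ap-south": 210}

nearest = pick_region(latency_ms, healthy={"us-east", "eu-west", "ap-south"})
fallback = pick_region(latency_ms, healthy={"us-east", "ap-south"})
```

If the nearest region is unavailable, the client is sent to the next-best one instead.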

Content-Based Routing

In this strategy, requests are directed to different servers based on the content of the request. For example, requests for images or video may be sent to one group of servers, while requests for database queries may be sent to another group of servers. This can help to improve performance and scalability by sending requests to the best-suited server or group of servers.
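A minimal sketch of such a routing rule, assuming media is identified by file extension and data queries by an `/api/` path prefix. The pool names, the extension list, and the prefix are assumptions for this example only.

```python
import posixpath
from urllib.parse import urlsplit

# Assumed set of extensions treated as images or video.
MEDIA_EXTENSIONS = {".png", ".jpg", ".gif", ".mp4"}


def choose_pool(url):
    """Pick a server pool name based on the content being requested."""
    path = urlsplit(url).path
    extension = posixpath.splitext(path)[1].lower()
    if extension in MEDIA_EXTENSIONS:
        return "media-pool"
    if path.startswith("/api/"):
        return "api-pool"
    return "web-pool"


image_pool = choose_pool("https://example.com/img/logo.PNG?v=2")
query_pool = choose_pool("https://example.com/api/orders?id=42")
default_pool = choose_pool("https://example.com/")
```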

Layer 4 vs. Layer 7 Load Balancing

Layer 4 load balancing operates at the transport layer (TCP/UDP), while Layer 7 load balancing operates at the application layer (HTTP/HTTPS). Layer 4 load balancing is faster and simpler, but Layer 7 load balancing can provide more advanced features, such as SSL termination and content switching. Choosing the right strategy depends on the specific needs and requirements of the application or service.

Virtual IP Address

In this strategy, all servers in the load balancing cluster sit behind a single virtual IP address, which clients connect to and from which traffic is distributed. This allows for easier scaling and failover, as all servers can be treated as a single entity. This is a great option for applications that need to scale quickly, because servers can be added to or removed from the cluster to match changing traffic patterns while clients keep using the same address.

Session Persistence

This strategy ensures that requests from the same client are always sent to the same server, to maintain session state and avoid issues with session data. This can be implemented using cookies, source IP address, or other client identification techniques. This is an important feature for applications that require a consistent experience across the multiple requests of a session, such as ecommerce or banking applications.
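A sketch of cookie-style persistence, assuming each client presents a session identifier (for example, from a cookie) and the balancer remembers which server it assigned. The class name `StickySessionBalancer` and the session IDs are hypothetical.

```python
class StickySessionBalancer:
    """Pins each session ID to the server that handled its first request."""

    def __init__(self, servers):
        self.servers = list(servers)
        if not self.servers:
            raise ValueError("at least one server is required")
        self.assignments = {}  # session ID -> server
        self._next = 0

    def route(self, session_id):
        server = self.assignments.get(session_id)
        if server is None:
            # New session: assign the next server in rotation and remember it.
            server = self.servers[self._next % len(self.servers)]
            self._next += 1
            self.assignments[session_id] = server
        return server


lb = StickySessionBalancer(["srv-a", "srv-b"])
alice_first = lb.route("session-alice")
bob_first = lb.route("session-bob")
alice_again = lb.route("session-alice")  # same server as the first request
```

A real implementation would also expire old entries and reassign sessions whose server has failed; both are left out here for brevity.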

With the right load balancing strategy, applications and services can be reliably and efficiently scaled to meet the demands of users. Understanding and implementing the right strategy is essential for any application or service that needs to remain available and performant.
